# Your RAG Chatbot Fails Because You Built It in the Wrong Order

Most RAG chatbots don’t fail because of bad models.

They fail because the system was built in the **wrong order**.

You start with prompts.

Then tweak chunk sizes.

Then switch embeddings.

Then add “one more trick.”

And somehow, the answers still feel unstable.

This isn’t bad luck.

It’s a predictable outcome of building from the wrong end.

---

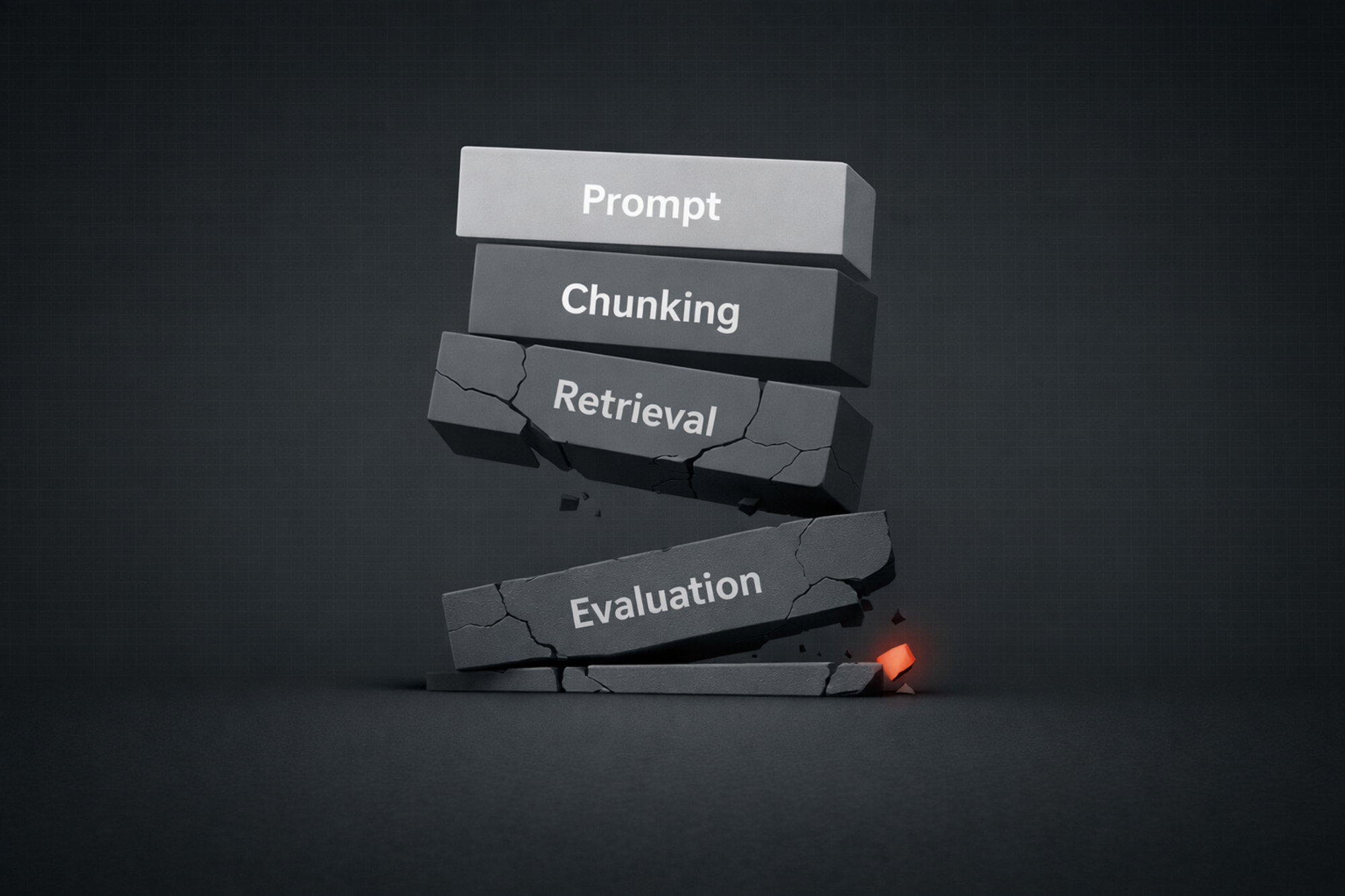

## The Common (Wrong) Build Order

Most RAG systems are built like this:

1. Pick a model

2. Write a clever prompt

3. Add a vector database

4. Tune chunking

5. Hope it works

This order feels natural because it’s demo-driven.

But in production, it almost guarantees failure.

Why?

Because **you’re optimizing generation before you understand retrieval**.

---

## The Core Mistake: Prompting Before You Can Measure

Here’s the hard truth:

> If you prompt before you can measure retrieval quality, you’re building blind.

In most systems:

- retrieval quality is unknown,

- chunk relevance is invisible,

- failures look like “model hallucinations.”

So teams do the only thing they can:

they tweak prompts.

That’s how RAG projects get stuck in infinite prompt iteration — while the real problem sits upstream.
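One quick way to stop mislabeling retrieval misses as hallucinations is to check each wrong answer for one thing: was the right source retrieved at all? The sketch below makes two assumptions. First, you know the expected source for each question. Second, your retriever returns a ranked list of source IDs. `triage_failure` and the file names are hypothetical.

```python
def triage_failure(expected_source: str, retrieved_sources: list[str], answer_correct: bool) -> str:
    """Label where a single question broke: 'ok', 'retrieval_miss', or 'generation_failure'."""
    if answer_correct:
        return "ok"
    if expected_source not in retrieved_sources:
        # The right evidence never reached the model; no prompt change can fix this.
        return "retrieval_miss"
    # The evidence was in the context window, but the answer was still wrong.
    return "generation_failure"


# Hypothetical example: the refund policy was never retrieved.
print(triage_failure("refund-policy.md", ["faq.md", "pricing.md"], answer_correct=False))
# retrieval_miss
```

If most failures land in `retrieval_miss`, more prompt iteration won’t help.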

---

## The Correct Build Order (That Actually Works)

If you want a RAG system that survives beyond the demo, the order matters more than the tools.

Here’s the sequence that works.

---

## Step 1: Define Failure First

Before writing any code, answer these questions:

- What does a *bad* answer look like?

- When should the system refuse to answer?

- What does “good enough” mean?

If failure isn’t defined, the system will fail silently.

Production RAG systems don’t aim to always answer.

They aim to **fail correctly**.
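Writing the answers to those questions down as an explicit policy makes “fail correctly” something you can test. This is a minimal sketch, assuming your retriever returns similarity scores where higher means more relevant. `FailurePolicy`, `should_refuse`, and the default values are hypothetical placeholders, not recommended settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailurePolicy:
    # Hypothetical defaults: calibrate them against your own questions.
    min_score: float = 0.75          # a chunk scoring below this doesn't count as evidence
    min_supporting_chunks: int = 1   # how many chunks must clear min_score
    refusal_message: str = "I couldn't find that in the documentation."


def should_refuse(scores: list[float], policy: FailurePolicy) -> bool:
    """Refuse when too few retrieved chunks clear the evidence threshold."""
    supporting = [s for s in scores if s >= policy.min_score]
    return len(supporting) < policy.min_supporting_chunks


policy = FailurePolicy()
print(should_refuse([0.62, 0.58], policy))  # True: weak evidence, refuse
print(should_refuse([0.81, 0.40], policy))  # False: one strong chunk
```

Similarity thresholds depend on the embedding model and the distance metric. A value that works for one setup says little about another.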

---

## Step 2: Make Retrieval Visible

Before prompts.

Before UX.

Before “AI magic.”

You must be able to see:

- which chunks were retrieved,

- their similarity scores,

- their sources,

- and their ordering.

If you can’t inspect retrieval, you can’t debug anything downstream.
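This doesn’t require a dashboard. Printing those four things for every query is enough to start. This is a minimal sketch, assuming the retriever returns chunks already sorted best-first. `RetrievedChunk` and `format_trace` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RetrievedChunk:
    text: str
    score: float
    source: str


def format_trace(query: str, chunks: list[RetrievedChunk], preview_chars: int = 60) -> str:
    """Render rank, score, source, and a short text preview for each retrieved chunk."""
    lines = [f"query: {query}"]
    for rank, chunk in enumerate(chunks, start=1):
        preview = chunk.text[:preview_chars].replace("\n", " ")
        lines.append(f"{rank}. score={chunk.score:.3f} source={chunk.source} | {preview}")
    return "\n".join(lines)


# Hypothetical retrieval result for one query.
chunks = [
    RetrievedChunk("Refunds are issued within 14 days of the request.", 0.812, "refund-policy.md"),
    RetrievedChunk("Our pricing has three tiers: Free, Pro, and Enterprise.", 0.604, "pricing.md"),
]
print(format_trace("How long do refunds take?", chunks))
```

Logging this trace next to every answer is what later lets you tell a retrieval miss from a generation failure.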

At this stage, generation can be:

> dumb, ugly, even temporary.

Visibility comes first.
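For example, a placeholder “generator” can simply return the top chunk verbatim, along with its source. This is a hypothetical stand-in, not a real answer step. It keeps the pipeline runnable end to end while all attention stays on retrieval. It assumes chunks arrive as `(text, score, source)` tuples sorted best-first.

```python
def placeholder_answer(chunks: list[tuple[str, float, str]]) -> str:
    """Deliberately dumb generation: echo the best chunk and where it came from."""
    if not chunks:
        return "No chunks retrieved."
    text, score, source = chunks[0]  # assumes chunks are sorted by score, best first
    return f"{text}\n(source: {source}, score: {score:.2f})"


print(placeholder_answer([("Refunds are issued within 14 days.", 0.81, "refund-policy.md")]))
```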

---

## Step 3: Create a Tiny Evaluation Set

You don’t need a massive benchmark.

You need:

- 20–30 real questions,

- expected answers,

- and expected sources.

This becomes your **regression harness**.

Every change you make later — embeddings, chunking, rerankers — must pass through this set.

Without evals, improvement is a feeling.

With evals, it’s a decision.
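The harness itself can be tiny. This minimal sketch checks only the retrieval half: for each question, did at least one expected source show up in the top k results? `EvalCase`, `source_hit_rate`, `passes_regression`, and the `retrieve` callable are all hypothetical. The expected answers are stored, but checking them would need a separate comparison (exact match, a rubric, or human review) that isn’t shown here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    question: str
    expected_answer: str
    expected_sources: set[str]


def source_hit_rate(cases: list[EvalCase], retrieve: Callable[[str], list[str]], k: int = 5) -> float:
    """Fraction of cases where any expected source appears in the top-k retrieved sources."""
    if not cases:
        raise ValueError("evaluation set is empty")
    hits = sum(1 for case in cases if case.expected_sources & set(retrieve(case.question)[:k]))
    return hits / len(cases)


def passes_regression(candidate: float, baseline: float, tolerance: float = 0.0) -> bool:
    """Accept a change only if it doesn't score worse than the current baseline."""
    return candidate >= baseline - tolerance


# Hypothetical two-question set and a fake retriever standing in for the real one.
cases = [
    EvalCase("How long do refunds take?", "14 days", {"refund-policy.md"}),
    EvalCase("Which plan includes SSO?", "Enterprise", {"pricing.md"}),
]


def fake_retrieve(question: str) -> list[str]:
    return ["refund-policy.md", "faq.md"] if "refund" in question else ["faq.md"]


score = source_hit_rate(cases, fake_retrieve)
print(score)                                   # 0.5
print(passes_regression(score, baseline=0.5))  # True
```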

---

## Step 4: Lock Chunking and Indexing

Now — and only now — do you touch chunking.

Why?

Because chunking directly shapes:

- recall,

- context density,

- and hallucination risk.

Once chunking and indexing are locked:

- you stop accidentally breaking the system with every tweak,

- retrieval behavior stabilizes,

- iteration becomes meaningful.

Most teams tune chunking before they can measure its effect.

That’s backwards.
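“Locked” can be made literal. This minimal sketch assumes simple fixed-size character chunking with overlap, which is one common strategy among several. The idea is to freeze the parameters in one config object and tag every index build and eval run with its fingerprint, so any chunking change is visible rather than accidental. `ChunkingConfig`, `chunk_text`, and the default values are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ChunkingConfig:
    # Hypothetical defaults: choose yours with the evaluation set, then freeze them.
    chunk_size: int = 800   # characters per chunk
    overlap: int = 100      # characters shared by neighboring chunks
    embedding_model: str = "your-embedding-model"  # placeholder, not a real model name

    def __post_init__(self) -> None:
        if not 0 <= self.overlap < self.chunk_size:
            raise ValueError("need 0 <= overlap < chunk_size")

    def fingerprint(self) -> str:
        """Short, stable hash of the exact configuration; store it with the index and eval results."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


def chunk_text(text: str, config: ChunkingConfig) -> list[str]:
    """Split text into fixed-size character windows that overlap by config.overlap."""
    if not text:
        return []
    step = config.chunk_size - config.overlap
    chunks = []
    start = 0
    while True:
        chunks.append(text[start : start + config.chunk_size])
        if start + config.chunk_size >= len(text):
            break  # this window reached the end of the text
        start += step
    return chunks


cfg = ChunkingConfig(chunk_size=4, overlap=2)
print(chunk_text("abcdefghij", cfg))  # ['abcd', 'cdef', 'efgh', 'ghij']
print(cfg.fingerprint())
```

If the fingerprint on an eval result doesn’t match the current index, that result no longer describes the system you’re running.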

---

## Step 5: Add Generation Last

Generation is the *final layer*.

At this point:

- retrieval works,

- failure cases are known,

- evals exist,

- latency is measurable.

Now prompts actually matter.

This is where:

- prompt structure,

- citation formatting,

- refusal logic,

- and tone control

make sense.

Before this step, prompts are just decoration.
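Here is roughly what that layer can look like once retrieval is trustworthy. It uses numbered context for citations and an explicit refusal instruction. It also adds a cheap check that the model only cites chunks it was actually given. This is a minimal sketch: the prompt wording, `build_prompt`, and `invalid_citations` are hypothetical, and the model call itself is left out.

```python
import re


def build_prompt(question: str, chunks: list[tuple[str, str]]) -> str:
    """Assemble a grounded prompt from (text, source) pairs, numbered for citation."""
    context = "\n\n".join(
        f"[{i}] ({source})\n{text}" for i, (text, source) in enumerate(chunks, start=1)
    )
    return (
        "Answer using only the numbered context below.\n"
        "Cite every claim with its context number, e.g. [1].\n"
        "If the context does not contain the answer, reply exactly: I don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def invalid_citations(answer: str, num_chunks: int) -> list[int]:
    """Return cited numbers that don't correspond to any chunk in the prompt."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return sorted(n for n in cited if not 1 <= n <= num_chunks)


print(build_prompt("How long do refunds take?", [("Refunds are issued within 14 days.", "refund-policy.md")]))
print(invalid_citations("Refunds take 14 days [1][3].", num_chunks=2))  # [3]
```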

---

## Step 6: Then (and Only Then) Add Complexity

Agents.

Rerankers.

Decision logic.

Tools.

All of these assume the foundation is solid.

If you add them earlier, they amplify chaos — not capability.

---

## Why This Order Feels Uncomfortable

This build order feels slow at first.

There’s no flashy demo.

No impressive UI.

No “wow” moment.

But it compounds.

Instead of constantly restarting, you **stack progress**.

That’s the difference between:

- a prototype that impresses,

- and a system that survives.

---

## The Real Lesson

RAG systems don’t fail because they’re hard.

They fail because teams start at the end.

If you build:

- prompts before retrieval,

- UX before failure modes,

- answers before measurements,

you’re optimizing noise.

---

## Final Thought

If your RAG chatbot only works when:

- retrieval is perfect,

- prompts are handcrafted,

- and no one asks the wrong question,

then the system isn’t unfinished.

It’s misordered.

And misordered systems don’t get better with time —

they just get more complicated.

---

### Related posts

- **Why Most RAG Projects Die After the Demo**

- **What to Fix First When RAG Fails**

- **Chatbot Evals That Actually Matter**

- **The Only RAG Diagram You Need**

Build order isn’t a detail.

It’s the architecture.