# Why Most RAG Projects Die After the Demo

RAG demos are dangerously convincing.

You upload a few PDFs, ask a question, and the model answers perfectly.

Everyone nods. Someone says _“this is it.”_

And then… nothing.

Weeks later, the project stalls, answers degrade, users stop trusting it, and the system quietly gets abandoned.

This isn’t because RAG doesn’t work.

It’s because **most RAG systems are built for demos, not for reality**.

I’ve seen the same pattern repeat over and over — and once you notice it, you can’t unsee it.

---

## The Demo Is Optimized for the Wrong Thing

A demo answers one question well.

Production systems must:

- handle bad queries,

- survive missing or conflicting documents,

- scale across changing data,

- and fail **predictably**.

Demos are optimized for **impression**.

Real systems need **resilience**.

That mismatch is where most RAG projects die.

---

## Failure #1: Retrieval Is Treated as a Black Box

In demos, retrieval is usually:

- a vector store,

- default chunking,

- `top-k = 5`,

- no inspection.

It looks fine — until it isn’t.

In production, the model doesn’t fail first.

**Retrieval does.**

Bad chunks in → confident nonsense out.

And because retrieval isn’t observable:

- nobody knows _why_ answers are wrong,

- debugging turns into prompt tweaking,

- trust erodes fast.

If you can’t answer:

> “Which chunks were retrieved, and why?”

You don’t have a system.

You have a magic trick.
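One way to make that question answerable is to log every retrieval as a structured record. The sketch below is a minimal, self-contained illustration under stated assumptions. A toy bag-of-words cosine score stands in for a real embedding model, and the names (`retrieve_with_trace`, `RetrievedChunk`, the `chunks` dictionary layout) are hypothetical, not any library's API.

```python
import json
import logging
import math
from collections import Counter
from dataclasses import asdict, dataclass
from typing import Dict, List

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("retrieval")


@dataclass
class RetrievedChunk:
    chunk_id: str
    source: str
    score: float
    rank: int


def _vectorize(text: str) -> Counter:
    # Toy bag-of-words vector (assumption); a real system would call its embedding model here.
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_with_trace(query: str, chunks: Dict[str, dict], top_k: int = 5) -> List[RetrievedChunk]:
    """Rank chunks by similarity and log exactly which ones were returned, with their scores."""
    q = _vectorize(query)
    scored = sorted(
        ((cid, meta["source"], _cosine(q, _vectorize(meta["text"]))) for cid, meta in chunks.items()),
        key=lambda item: item[2],
        reverse=True,
    )
    results = [
        RetrievedChunk(chunk_id=cid, source=src, score=round(score, 3), rank=i + 1)
        for i, (cid, src, score) in enumerate(scored[:top_k])
    ]
    # One structured log line per query answers "which chunks were retrieved, and why?"
    log.info(json.dumps({"query": query, "top_k": top_k, "results": [asdict(r) for r in results]}))
    return results


# Hypothetical corpus for illustration.
chunks = {
    "c1": {"source": "refund-policy.pdf", "text": "refunds are issued within 30 days"},
    "c2": {"source": "shipping.pdf", "text": "orders ship within 2 business days"},
}
results = retrieve_with_trace("how long do refunds take", chunks, top_k=2)
for r in results:
    print(r.rank, r.chunk_id, r.source, r.score)
```

In a real deployment the same record would likely also carry the embedding model version and chunking settings, so a wrong answer can be traced back to a specific retrieval configuration instead of to the prompt.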

---

## Failure #2: No Evaluation Loop Exists

Most demos have:

- zero benchmarks,

- zero regression tests,

- zero metrics beyond “sounds right.”

So when something changes — new documents, new embeddings, new prompts — no one knows if the system improved or got worse.

RAG without evaluation is guessing at scale.
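A minimal regression gate might look like the sketch below. It assumes you maintain a small hand-labeled set of queries with the chunk IDs that should come back, and it uses hit rate@k (did any relevant chunk appear in the top k?) as a deliberately simple retrieval metric. The function names, the baseline value, and the 0.02 tolerance are illustrative assumptions.

```python
from typing import Callable, Dict, List

# Hypothetical labeled eval set: query -> IDs of chunks that should be retrieved for it.
EvalSet = Dict[str, List[str]]
Retriever = Callable[[str, int], List[str]]


def hit_rate_at_k(retrieve: Retriever, eval_set: EvalSet, k: int = 5) -> float:
    """Fraction of eval queries with at least one relevant chunk in the top-k results."""
    if not eval_set:
        return 0.0
    hits = sum(
        1 for query, relevant in eval_set.items()
        if set(retrieve(query, k)[:k]) & set(relevant)
    )
    return hits / len(eval_set)


def check_regression(current: float, baseline: float, tolerance: float = 0.02) -> None:
    """Fail loudly when a change pushes the metric below the stored baseline."""
    if current < baseline - tolerance:
        raise AssertionError(
            f"hit_rate@k regressed: {current:.3f} vs baseline {baseline:.3f} (tolerance {tolerance})"
        )


eval_set = {
    "how long do refunds take": ["c1"],
    "when do orders ship": ["c2"],
}


def candidate_retriever(query: str, k: int) -> List[str]:
    # Stand-in for the retriever under test (e.g. after re-embedding the corpus).
    return ["c1", "c3"] if "refund" in query else ["c4", "c5"]


score = hit_rate_at_k(candidate_retriever, eval_set, k=2)
print(f"hit_rate@2 = {score:.2f}")
check_regression(score, baseline=0.50)  # passes: no drop beyond the tolerance
```

Run on every change to documents, embeddings, or prompts, a check like this turns a silent quality drop into a failing build.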

In production, you need:

- retrieval quality metrics,

- answer grounding checks,

- latency tracking,

- failure categorization.

Without these, the project doesn’t break loudly.

It slowly **rots**.
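An answer grounding check can start as crudely as asking whether each sentence of the answer is supported by the retrieved text. The sketch below uses plain word overlap, which is an assumption made for brevity: it misses paraphrases and cannot detect contradictions, so treat it as a first tripwire rather than a real grounding metric. The function names, the stopword list, and the 0.5 threshold are hypothetical.

```python
import re
from typing import List, Set, Tuple

# Tiny illustrative stopword list; a real check would use a proper one or a model-based judge.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and", "or", "for", "on", "within", "it"}


def _content_words(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def ungrounded_sentences(answer: str, contexts: List[str], min_overlap: float = 0.5) -> List[Tuple[str, float]]:
    """Return answer sentences whose content words mostly do not appear in the retrieved contexts."""
    context_vocab = set().union(*(_content_words(c) for c in contexts))
    flagged = []
    # Naive sentence split on terminal punctuation followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _content_words(sentence)
        if not words:
            continue
        overlap = len(words & context_vocab) / len(words)
        if overlap < min_overlap:
            flagged.append((sentence, round(overlap, 2)))
    return flagged


contexts = ["Refunds are issued within 30 days of purchase."]
answer = "Refunds are issued within 30 days. Shipping is always free worldwide."
flagged = ungrounded_sentences(answer, contexts)
print(flagged)
```

Flagged sentences are also useful input for failure categorization: logged next to the retrieval record, they help separate "retrieval missed the document" from "the model made something up."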

---

## Failure #3: Latency Is Ignored Until It’s Too Late

Demos run on:

- small datasets,

- local machines,

- ideal conditions.

Real users don’t wait 12 seconds for an answer.

Every added step (query embedding, retrieval, reranking, generation) runs in sequence, so each one adds directly to the time the user waits.

By the time users complain, the architecture is already wrong.

Latency isn’t a performance detail.

It’s a **product decision**.

If you don’t budget for it early, the system never recovers.
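Treating latency as a budget can be as simple as timing every stage and comparing it against an explicit allowance. The sketch below is a minimal illustration: the stage names mirror the steps above, but the `LatencyTracker` class and the millisecond budgets are hypothetical placeholders, not recommended values. Because the stages run one after another, end-to-end latency is the sum of the stage timings.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator

# Hypothetical per-stage budgets in milliseconds; real numbers depend on your product.
BUDGET_MS = {"embed_query": 100, "retrieve": 200, "rerank": 300, "generate": 2000}


class LatencyTracker:
    def __init__(self, budget_ms: Dict[str, float]):
        self.budget_ms = budget_ms
        self.timings_ms: Dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        # Time the wrapped block, recording the duration even if it raises.
        start = time.perf_counter()
        try:
            yield
        finally:
            self.timings_ms[name] = (time.perf_counter() - start) * 1000

    def total_ms(self) -> float:
        # Sequential stages: end-to-end latency is the sum of stage latencies.
        return sum(self.timings_ms.values())

    def over_budget(self) -> Dict[str, float]:
        """Stages that exceeded their individual budget, mapped to the overrun in ms."""
        return {
            name: ms - self.budget_ms[name]
            for name, ms in self.timings_ms.items()
            if name in self.budget_ms and ms > self.budget_ms[name]
        }


tracker = LatencyTracker(BUDGET_MS)
with tracker.stage("embed_query"):
    time.sleep(0.01)  # simulated query embedding
with tracker.stage("retrieve"):
    time.sleep(0.25)  # simulated slow vector search
print(f"total: {tracker.total_ms():.0f} ms of {sum(BUDGET_MS.values())} ms budget")
print("over budget:", tracker.over_budget())
```

Setting the budgets before building the pipeline forces the trade-off early: a reranker that costs 300 ms has to earn its place in the total.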

---

## Failure #4: The System Has No Failure Mode

In demos, the model always answers.

In production, it shouldn’t.

Good RAG systems know when to:

- say “I don’t know,”

- ask for clarification,

- return partial answers,

- or surface missing data.

Most systems don’t.

So when retrieval fails, the model hallucinates — confidently.

That’s the moment users stop trusting it.

And once trust is gone, the project is already dead.
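Making failure explicit usually means deciding how to respond before generating anything. The sketch below is one hypothetical policy, assuming retrieval returns similarity scores in [0, 1]. The thresholds, the short-query rule, and the `decide`/`Action` names are illustrative assumptions that would need calibrating against your own evaluation set.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Action(Enum):
    ANSWER = "answer"
    PARTIAL = "partial_answer"
    CLARIFY = "ask_for_clarification"
    ABSTAIN = "say_i_dont_know"


@dataclass
class Decision:
    action: Action
    reason: str


def decide(query: str, scores: List[float], strong: float = 0.75, weak: float = 0.4) -> Decision:
    """Choose a response mode from retrieval quality; thresholds are illustrative assumptions."""
    if len(query.split()) < 3:
        # Hypothetical heuristic: very short queries rarely retrieve reliably.
        return Decision(Action.CLARIFY, "query too short to retrieve reliably")
    if not scores or max(scores) < weak:
        return Decision(Action.ABSTAIN, "no retrieved chunk is relevant enough")
    strong_hits = sum(1 for s in scores if s >= strong)
    if strong_hits == 0:
        return Decision(Action.PARTIAL, "evidence is weak; answer partially and say what is missing")
    return Decision(Action.ANSWER, f"{strong_hits} strongly relevant chunk(s)")


print(decide("refunds?", [0.9]).action)
print(decide("how long do refunds take", [0.2, 0.1]).action)
print(decide("how long do refunds take", [0.6, 0.5]).action)
```

The design choice that matters is that abstaining is a first-class outcome the application can render, rather than something the prompt politely asks the model to do.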

---

## Failure #5: The Architecture Can’t Evolve

The final killer is rigidity.

Many RAG demos are built as:

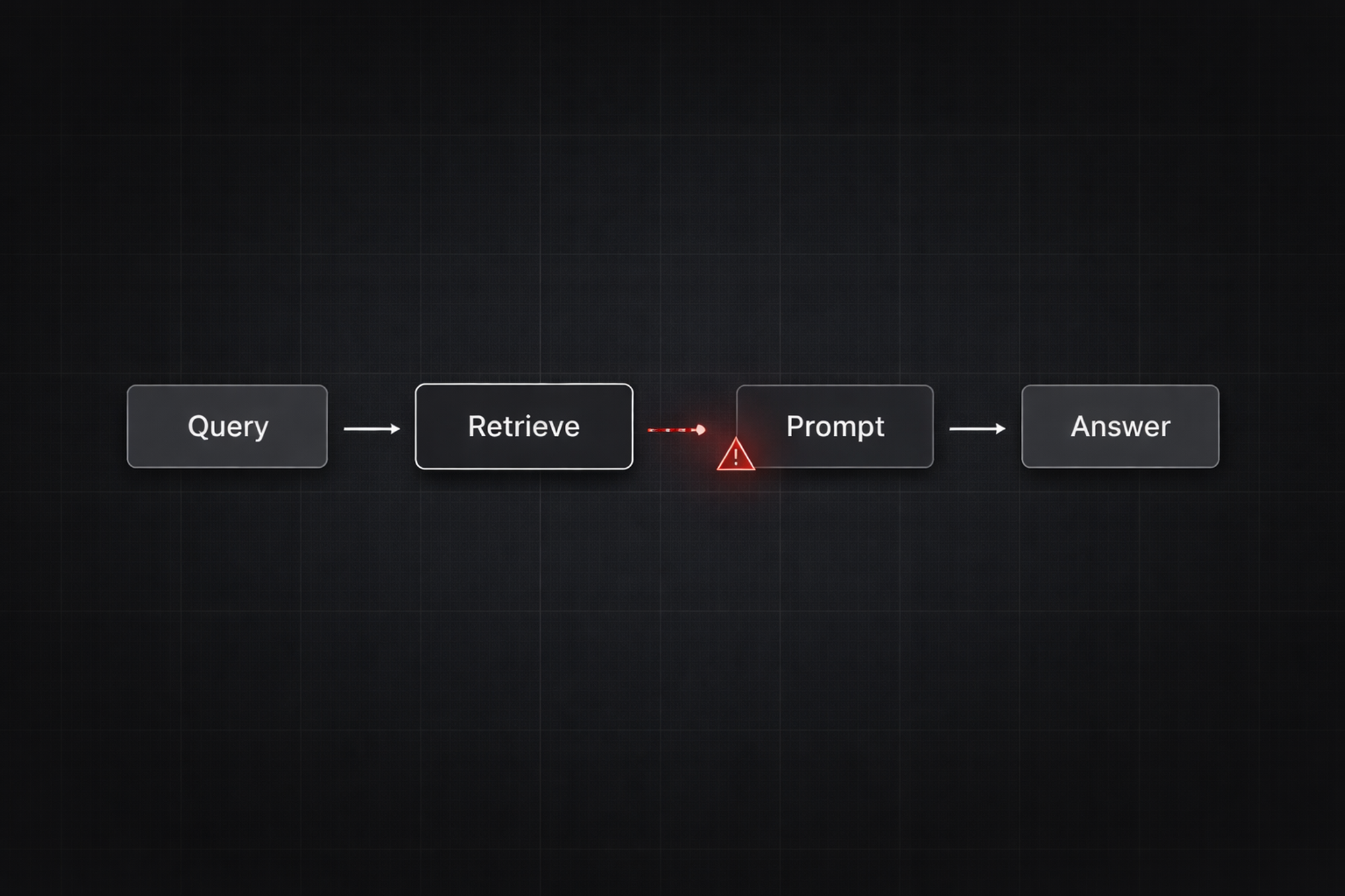

query → retrieve → prompt → answer

That works — until you need:

- citations,

- multi-step reasoning,

- decision logic,

- or agent behavior.

At that point, teams try to bolt on complexity — and everything collapses.

RAG systems don’t fail because they’re complex.

They fail because they **weren’t designed to grow**.
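One way to leave room for growth is to model the pipeline as an ordered list of stages that share a context object, so a new requirement becomes a new stage rather than a rewrite. The sketch below assumes exactly that; the `Context` fields and the stage functions are hypothetical stubs standing in for real retrieval and generation calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Context:
    query: str
    chunks: List[str] = field(default_factory=list)
    prompt: str = ""
    answer: str = ""
    citations: List[str] = field(default_factory=list)
    trace: List[str] = field(default_factory=list)


Stage = Callable[[Context], Context]


def run_pipeline(ctx: Context, stages: List[Stage]) -> Context:
    for stage in stages:
        ctx = stage(ctx)
        ctx.trace.append(stage.__name__)  # record which stages ran, in order
    return ctx


# Stub stages; real ones would call a retriever and an LLM.
def retrieve(ctx: Context) -> Context:
    ctx.chunks = ["[doc1] Refunds are issued within 30 days."]
    return ctx


def build_prompt(ctx: Context) -> Context:
    ctx.prompt = "Answer using only these sources:\n" + "\n".join(ctx.chunks) + f"\n\nQ: {ctx.query}"
    return ctx


def generate(ctx: Context) -> Context:
    ctx.answer = "Refunds are issued within 30 days."
    return ctx


def add_citations(ctx: Context) -> Context:
    # Added later as a new stage; retrieve, build_prompt and generate are untouched.
    ctx.citations = [chunk.split("]")[0].lstrip("[") for chunk in ctx.chunks]
    return ctx


demo_pipeline = [retrieve, build_prompt, generate]
grown_pipeline = demo_pipeline + [add_citations]

result = run_pipeline(Context(query="How long do refunds take?"), grown_pipeline)
print(result.answer, result.citations, result.trace)
```

Reranking or decision logic fits the same shape as additional stages, and the `trace` list records which stages actually ran for each query.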

---

## Why the Demo Still Matters (But Only as a Trap)

Here’s the uncomfortable truth:

Demos are necessary.

But they’re also misleading.

They prove the **idea**, not the **system**.

A successful RAG project isn’t defined by how good the first answer looks, but by how the system behaves when things go wrong.

---

## What Actually Survives in Production

The RAG systems that survive share a few traits:

- Retrieval is observable and debuggable

- Evaluation exists from day one

- Latency is treated as a hard constraint

- Failure is explicit, not hidden

- Architecture assumes change

These aren’t optimizations.

They’re prerequisites.

---

## Final Thought

If your RAG project only works when:

- the data is clean,

- the query is perfect,

- and nothing unexpected happens,

then it’s already dead.

It just hasn’t failed loudly yet.

The demo isn’t the finish line.

It’s the **most dangerous part of the journey**.

---

### Related posts

- **RAG Systems Fail Before the Model Even Runs**

- **Why Latency Kills AI UX**

- **Chatbot Evals That Actually Matter**

This is where real AI systems are built — not on stage, but in the messy space after the applause ends.